Proxmox - Keeping Quorum with QDevices


Introduction

In this post, we will develop a way of maintaining high availability in a Proxmox cluster with two nodes, or any even number of nodes. To do this, we will use what Corosync calls a QDevice (quorum device) to break ties and keep the cluster quorate. This allows your hypervisors to keep operating under failure conditions that would otherwise cause an outage. Using a QDevice is only recommended for non-production deployments where adding another full Proxmox node is not feasible. For our purposes, we will use a Raspberry Pi as the QDevice. Let's begin!

Understanding the Benefits

Proxmox uses the Corosync cluster engine behind the scenes. The Proxmox background services rely on Corosync in order to communicate configuration changes between the nodes in the cluster.

Corosync's vote quorum considers the cluster quorate only while a strict majority of the expected votes is present, and Proxmox only allows configuration changes while the cluster is quorate. With one vote per node, this is why Proxmox recommends at least three nodes per cluster, which may not be feasible in testing and homelab setups. In a two-node setup, both nodes must always be operational before any change, such as starting, stopping, or creating VMs and containers, can be made.

To fix this, we can add an external QDevice whose sole purpose is to contribute one extra vote and settle ties during node outages. The QDevice is not a visible member of the Proxmox cluster and cannot run any virtual machines or containers.
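To make the majority rule concrete, here is a minimal bash sketch of the arithmetic. It assumes the plain votequorum formula (one vote per node, quorum = expected votes / 2 + 1 with integer division) and deliberately ignores special votequorum options such as two_node or wait_for_all. The function names quorum_threshold, is_quorate and report are hypothetical helpers for illustration, not part of Proxmox or Corosync.

```bash
#!/usr/bin/env bash
# Sketch of the votequorum majority rule (special options such as
# two_node or wait_for_all are deliberately ignored).

# quorum_threshold EXPECTED_VOTES: print the minimum number of votes needed.
quorum_threshold() {
  local expected="$1"
  echo $(( expected / 2 + 1 ))
}

# is_quorate EXPECTED_VOTES PRESENT_VOTES: exit status 0 when quorate.
is_quorate() {
  local expected="$1" present="$2"
  (( present >= $(quorum_threshold "$expected") ))
}

# report EXPECTED PRESENT LABEL: print one human-readable line per scenario.
report() {
  local expected="$1" present="$2" label="$3" state="NOT quorate"
  if is_quorate "$expected" "$present"; then
    state="quorate"
  fi
  printf '%s: %d of %d votes, need %d -> %s\n' \
    "$label" "$present" "$expected" "$(quorum_threshold "$expected")" "$state"
}

# Two-node cluster with one node down, without and with a QDevice vote.
report 2 1 "2 nodes, 1 down"
report 3 2 "2 nodes + QDevice, 1 node down"
```

Run as a script, it reports that a lone surviving node in a two-node cluster holds 1 of 2 votes and loses quorum, while the same node plus the QDevice holds 2 of 3 votes and stays quorate. That matches the Expected votes: 3 and Quorum: 2 values that pvecm status reports once the QDevice is added.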

The benefits for adding a QDevice to a cluster include:

- Allowing changes on the remaining hypervisor while one node is down in a two-node deployment.

- Breaking ties, such as an even split of votes, in deployments with an even number of nodes.

Creating the QDevice

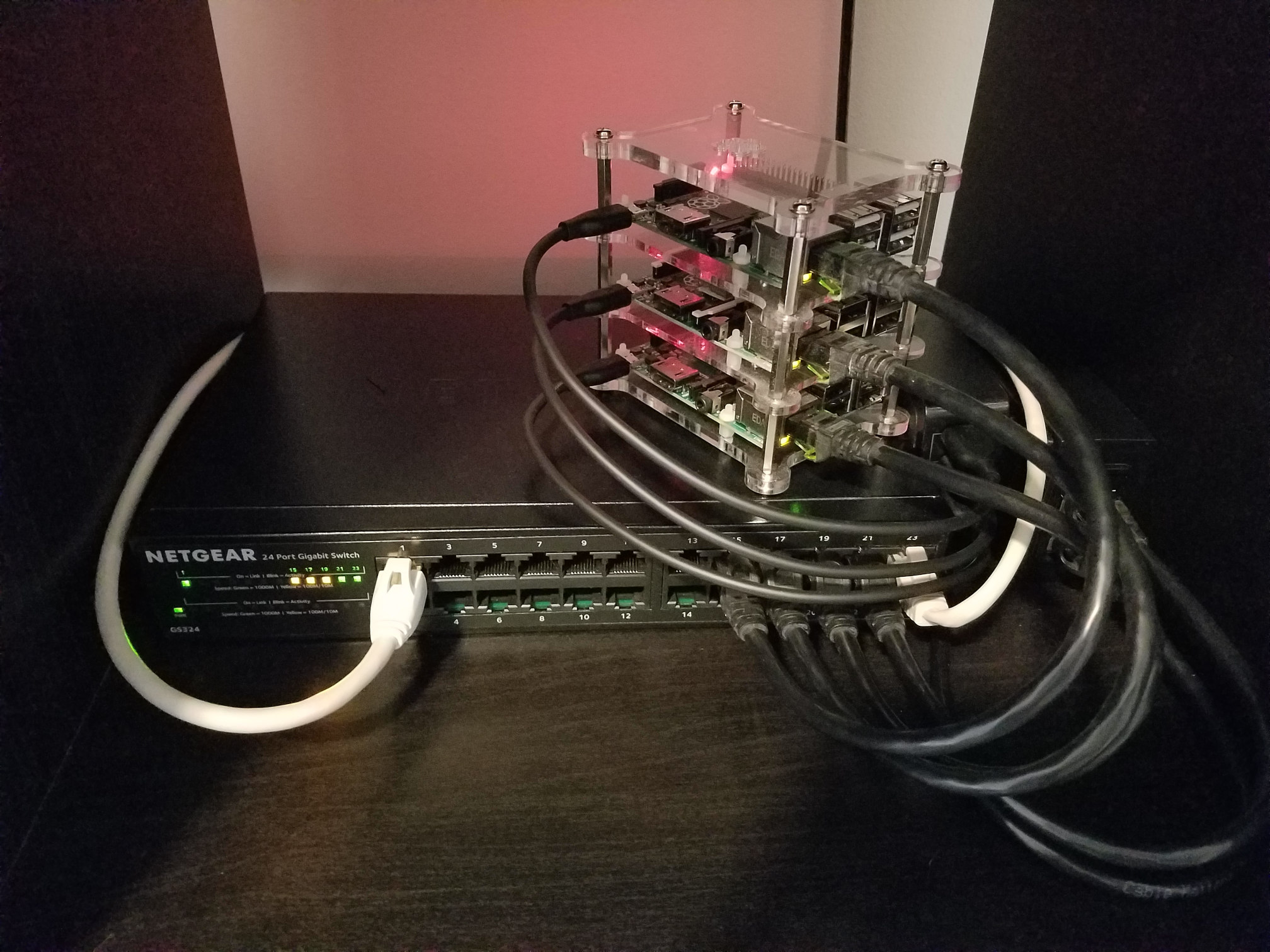

For my purposes, I am using a Raspberry Pi 2B running Arch Linux ARM as a slim Corosync QDevice node. My two-node Proxmox cluster runs the latest Proxmox VE 6, which uses Corosync 3.

Installing Dependencies

On the Raspberry Pi, we will install the corosync-qdevice package from the AUR.

Note that, at the time of writing, the following dependencies of this package do not list armv7h in the arch array of their PKGBUILD:

- https://aur.archlinux.org/packages/libcgroup/

- https://aur.archlinux.org/packages/kronosnet/

- https://aur.archlinux.org/packages/corosync/

You can safely add armv7h to each of these PKGBUILDs manually and expect them to build and install successfully.
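The dependency builds can be scripted. The following is a hedged sketch, assuming an Arch Linux ARM host with base-devel and git installed, a regular user with sudo (makepkg refuses to run as root), single-line arch=(...) arrays, and the build order libcgroup, kronosnet, corosync, corosync-qdevice. The helper names add_armv7h, build_aur_package and build_all are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: build AUR packages in dependency order on Arch Linux ARM,
# adding armv7h to each PKGBUILD's arch array if it is missing.

# Assumed build order: dependencies first, then corosync-qdevice itself.
# makepkg -s cannot pull AUR dependencies, so each one is installed before the next.
AUR_PACKAGES=(libcgroup kronosnet corosync corosync-qdevice)

# add_armv7h PKGBUILD_PATH: append 'armv7h' to the arch=(...) line if absent.
add_armv7h() {
  local pkgbuild="$1"
  # Already listed: nothing to do.
  if grep -q "^arch=.*armv7h" "$pkgbuild"; then
    return 0
  fi
  # Assumes a single-line array, e.g. arch=('x86_64') -> arch=('x86_64' 'armv7h').
  sed -i "s/^arch=(\(.*\))/arch=(\1 'armv7h')/" "$pkgbuild"
}

# build_aur_package NAME: clone from the AUR, patch the arch array, build and install.
build_aur_package() {
  local name="$1"
  git clone "https://aur.archlinux.org/${name}.git" || return 1
  add_armv7h "${name}/PKGBUILD"
  # -s installs repository dependencies with pacman, -i installs the built package.
  (cd "$name" && makepkg -si --noconfirm)
}

# build_all: defined but not invoked here; call it explicitly on the Pi.
build_all() {
  local pkg
  for pkg in "${AUR_PACKAGES[@]}"; do
    build_aur_package "$pkg" || return 1
  done
}
```

After sourcing the file on the Pi, running build_all works through the list in order. As an alternative to editing the files, makepkg's --ignorearch (-A) flag builds a package whose PKGBUILD does not list the current architecture.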

Once the dependencies are met and corosync-qdevice is installed, start and enable the corosync-qnetd systemd service:

```bash
sudo systemctl start corosync-qnetd.service
sudo systemctl enable corosync-qnetd.service
```
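Before moving on to the Proxmox nodes, it can help to confirm that qnetd is actually up. This sketch assumes qnetd's default TCP port, 5403, and relies on systemctl is-active and ss -tln; qnetd_listening and check_qnetd are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Hypothetical helpers to confirm corosync-qnetd is running on the Pi.

# Assumption: qnetd's default TCP port; change it if you configured another.
QNETD_PORT=5403

# qnetd_listening: read `ss -tln` output on stdin; succeed if any field ends
# in :QNETD_PORT, which in practice is the Local Address:Port column
# (0.0.0.0:5403, [::]:5403, *:5403, ...).
qnetd_listening() {
  awk -v port="$QNETD_PORT" '
    { for (i = 1; i <= NF; i++) if ($i ~ (":" port "$")) found = 1 }
    END { exit (found ? 0 : 1) }
  '
}

# check_qnetd: service state first, then the listening socket.
check_qnetd() {
  if ! systemctl is-active --quiet corosync-qnetd.service; then
    echo "corosync-qnetd.service is not active" >&2
    return 1
  fi
  if ! ss -tln | qnetd_listening; then
    echo "nothing is listening on TCP ${QNETD_PORT}" >&2
    return 1
  fi
  echo "corosync-qnetd is active and listening on TCP ${QNETD_PORT}"
}
```

If check_qnetd succeeds, corosync-qnetd-tool -s should also print the daemon's status, and corosync-qnetd-tool -l lists connected clusters once the Proxmox side is configured. Any firewall on the Pi needs to allow that TCP port from the Proxmox nodes.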

Adding the QDevice to the Cluster

On each of the Proxmox nodes, you will need to do the following.

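- Make sure the QDevice can be reached via SSH as root, since pvecm qdevice setup logs in to it to copy the cluster's SSH key and set up certificates.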

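- Install the corosync-qdevice package. The corosync-qnetd package is only needed on the external QDevice host (here, the Raspberry Pi), not on the Proxmox nodes.

```bash
apt install corosync-qdevice
```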

Once this is done on every node, run the QDevice setup once, from any one of the Proxmox nodes:

```bash
pvecm qdevice setup <rbp-ip>
```
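The per-node checklist and the one-time setup can be wrapped in a small script. This is a minimal sketch that assumes bash (for its /dev/tcp redirection), SSH on the default port 22, and dpkg on the Debian-based Proxmox nodes; preflight_qdevice and setup_qdevice are hypothetical names. The port check only proves that SSH is reachable, so pvecm qdevice setup may still prompt for the QDevice's root password.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight and setup helpers for adding a QDevice.

# preflight_qdevice QDEVICE_IP: run on each Proxmox node.
preflight_qdevice() {
  local qdevice_ip="$1"
  # Assumes SSH on port 22; bash's /dev/tcp opens a TCP connection, and
  # timeout stops the attempt after 5 seconds if the host is unreachable.
  if ! timeout 5 bash -c ": < /dev/tcp/${qdevice_ip}/22" 2>/dev/null; then
    echo "SSH port 22 on ${qdevice_ip} is not reachable from $(hostname)" >&2
    return 1
  fi
  # Only corosync-qdevice is needed on the Proxmox nodes.
  if ! dpkg -s corosync-qdevice >/dev/null 2>&1; then
    echo "corosync-qdevice is not installed on $(hostname)" >&2
    return 1
  fi
  echo "preflight OK for ${qdevice_ip}"
}

# setup_qdevice QDEVICE_IP: run once, from any single Proxmox node.
setup_qdevice() {
  local qdevice_ip="$1"
  preflight_qdevice "$qdevice_ip" || return 1
  # May prompt for the QDevice's root password if no SSH key is in place yet.
  pvecm qdevice setup "$qdevice_ip"
}
```

In this sketch, preflight_qdevice would be run on every node, and setup_qdevice on just one of them.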

Verification of Success

To verify, run pvecm status and check that the QDevice has been added and holds a single vote in the cluster.

```
root@pve:~# pvecm status
Cluster information
-------------------
Name:             JenCluster
Config Version:   5
Transport:        knet
Secure auth:      on

Quorum information
------------------
Date:             Fri Jan 3 22:13:24 2020
Quorum provider:  corosync_votequorum
Nodes:            2
Node ID:          0x00000001
Ring ID:          1.2c
Quorate:          Yes

Votequorum information
----------------------
Expected votes:   3
Highest expected: 3
Total votes:      3
Quorum:           2
Flags:            Quorate Qdevice

Membership information
----------------------
    Nodeid      Votes    Qdevice Name
0x00000001          1    A,V,NMW 192.168.1.131 (local)
0x00000002          1         NR 192.168.1.116
0x00000000          1            Qdevice
```
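The same checks can be automated by parsing the output. This is a minimal sketch assuming the field layout shown above (a Quorate: line, a Flags: line, and a membership row ending in Qdevice); that layout is not a stable interface, so the parser may need adjusting for other Proxmox versions. check_qdevice_status is a hypothetical name. For reference, the corosync-quorumtool documentation describes the Qdevice column letters as A/NA (alive or not alive), V/NV (vote or no vote), MW/NMW (master wins or not) and NR (not registered).

```bash
#!/usr/bin/env bash
# Hypothetical checker for `pvecm status` output read on stdin.
check_qdevice_status() {
  awk '
    # "Quorate:          Yes" in the Quorum information section.
    $1 == "Quorate:" { quorate = ($2 == "Yes") }
    # "Flags: Quorate Qdevice" in the Votequorum information section.
    $1 == "Flags:" { for (i = 2; i <= NF; i++) if ($i == "Qdevice") qflag = 1 }
    # Membership row for the QDevice, e.g. "0x00000000  1  Qdevice".
    $1 ~ /^0x/ && $NF == "Qdevice" { qvotes = $2 }
    END {
      if (!quorate) { print "cluster is not quorate"; exit 1 }
      if (!qflag) { print "Qdevice flag missing"; exit 1 }
      if (qvotes != 1) { print "Qdevice does not hold exactly one vote"; exit 1 }
      print "quorate with a 1-vote Qdevice"
    }
  '
}
```

On a node, this would be used as pvecm status | check_qdevice_status, with a non-zero exit status signalling that one of the three conditions failed.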

One important note: as described in the Corosync External Vote Support section of the Proxmox documentation, you should remove the QDevice before adding another Proxmox node. With a third node, the cluster has an odd node count again and no longer needs the tie-breaking vote.
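The removal rule can be expressed as a small planning helper. This sketch only prints the commands rather than running them. Both <existing-node-ip> (any current cluster member) and <qdevice-ip> are placeholders. qdevice_recommended and plan_node_addition are hypothetical names, and the even-count rule reflects the guidance in this post that a QDevice is meant for even node counts.

```bash
#!/usr/bin/env bash
# Hypothetical planner: prints, but does not run, the pvecm commands
# for adding one node to a cluster.

# qdevice_recommended NODE_COUNT: succeed for even node counts.
qdevice_recommended() {
  local nodes="$1"
  (( nodes % 2 == 0 ))
}

# plan_node_addition CURRENT_NODES HAS_QDEVICE(yes|no)
plan_node_addition() {
  local current_nodes="$1" has_qdevice="$2"
  local new_total=$(( current_nodes + 1 ))
  # Remove the QDevice before the cluster membership changes.
  if [ "$has_qdevice" = "yes" ]; then
    echo "on an existing node: pvecm qdevice remove"
  fi
  # <existing-node-ip> is a placeholder for any current cluster member.
  echo "on the new node: pvecm add <existing-node-ip>"
  # Re-add a QDevice only if the new total is even.
  if qdevice_recommended "$new_total"; then
    echo "on any node: pvecm qdevice setup <qdevice-ip>"
  fi
}
```

For the two-node cluster in this post, plan_node_addition 2 yes prints the pvecm qdevice remove step followed by pvecm add. The QDevice is not re-added because the resulting three-node cluster has an odd node count.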